VR TERROIR

VR TERROIR challenges the standardization of digital culture by creating hyperlocal avatars that absorb and are shaped by their environments, carrying their “lived experiences” like terroir. The objective is to visualise how context (including data, textures, and local inputs) can alter a digital identity.

Virtual representations of users in digital contexts are often treated as static assets, limited to predictable and controllable formal and behavioural parameters that fit within the rigid walled gardens of the big four tech companies.

VR Terroir challenges this by proposing systems that are rooted in their specific location, history, and context.

The work is a video of a character moving through an environment made of synthetic materials built from HLRS data and Stuttgart's physical environment.

With this vision and approach, together with the supercomputing center we developed a working prototype within the pipelines and constraints of HLRS, which opens a research direction for the creation of context-aware avatars.

Exploring how environments can affect digital identities.

How does the accumulation of external inputs change a system?

I aim to create avatars that inherit lived experiences, so that the passage of time and space has a visible effect on them, as in the physical world.

Why: Character design in XR applications lacks the richness found in other media. This can be due to the historical technical restrictions of the medium or the heavy platformization of the space.

What am I fighting?

Corporate positivism and standardisation that reduces digital existence to controlled archetypes and homogenisation of visual culture.

As in most digital areas, services and spaces offered by profit-prioritising entities tend to operate from a risk-averse neutrality, where companies enforce strict, controversy-free environments to avoid any potential backlash. This approach propels culture towards a hyper-sanitized mediocrity, a reality where only the safest, most generic and controlled content survives.

The concept of control and predictable content is key to the status quo of digital spaces, because economic markets are very uncomfortable with unpredictability and unforeseen changes, and digital spaces mostly operate within those markets.

That is why in most cases, the creation of digital identities is controlled in the form of limitation, camouflaging predictability as paradox-of-choice, where the user is provided with seemingly a lot of freedom as long as it happens within the predefined boundaries.

In the context of art and science, we can operate outside those constraints to prototype solutions that challenge existing rules.

We already see the concept of environment affecting beings in specifically curated digital experiences such as gaming, but those usually happen in closed systems where a pipeline of inputs-behaviour-outputs is predefined.

In VR-Terroir, I aim to build it as open and variable as possible, allowing for different inputs and behaviours, in order to question the aesthetic narratives of digital presence, moving away from superficial, profiling avatars towards a texture-rich, systemic, entropy-based approach.

Why this matters, and why in XR/VR?

Most avatars are presented as shells for users to try on, like costumes in a wardrobe, devoid of lived experience.

Even state-of-the-art avatars in niche applications such as VRChat, though expressive and detailed, with physics and scriptable behaviours, remain unaffected by their environment.

We are constantly affected by external inputs; we are not the same after a digital experience.

What if your avatar carried the signs of places you've been?

What if your avatar continued to exist while you are not inhabiting it?

What if your avatar enriches itself by interacting with people and places?

What is it?

A system to create 3D digital characters that evolve over time based on images and contextual information.

Technically: Input a photo of a texture, generate a 3D avatar + a random-ish set of variables that affect the appearance and behaviour of the avatar over time and space.
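As a rough illustration of the "random-ish set of variables" part only (not the 3D generation step), here is a minimal sketch. It assumes the variables are seeded from the photo itself so that the same texture always yields the same starting values. The function name and the variable names are hypothetical and not taken from the project.

```python
import hashlib
import random


def avatar_variables(texture_bytes: bytes, count: int = 4) -> dict:
    """Derive a repeatable, random-ish set of variables from a texture photo."""
    # Hash the image bytes so the same photo always produces the same seed.
    seed = int.from_bytes(hashlib.sha256(texture_bytes).digest()[:8], "big")
    rng = random.Random(seed)
    # Hypothetical variable names; every value lies in [0, 1).
    names = ["roughness_bias", "growth_rate", "colour_drift", "sway"][:count]
    return {name: rng.random() for name in names}


photo = b"stand-in bytes for a texture photo"
variables = avatar_variables(photo)
```

Seeding from the image keeps the result tied to its source material: a different photo gives a different avatar, while the same photo can be regenerated at any time.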

The artwork:

A multi-screen video-installation that shows the concept of entropy for avatars in digital spaces:

A character evolving over time as its digital existence crosses different environments.

A location-based piece:

A workshop/session/tour to distill "what makes a space a place", where participants collect visual samples of interest.

Video/Web/VR piece: Images from the workshop are used to compose a digital space that avatars inhabit and are affected by.

👇 Development log

[WIP Dec’24-Jan’26] : A project from the S+T+ARTS EC[H]O residency

challenge: Virtual Representations of Users / Spatial Computing

context: Virtual Reality, Scientific Visualisations, Collaboration, Avatar, Design

keywords: Biases & Challenges / scientific datasets / creative potential / realism and abstraction / nonverbal communication / perceptions.

INITIAL IDEA & APPROACH

VR TERROIR explores the virtual representations of users, in collaboration with the High Performance Computing Center in Stuttgart (HLRS), which has a solution for exploring scientific data in Virtual or Augmented Reality settings, allowing multiple remote users to be present.

It is an amazing tool, but there is a missing piece of the puzzle: how do we represent users there in a way that avoids the uncanny valley, supports non-verbal communication and has the right degree of realism and abstraction?

My proposal, in a nutshell, is to build on the rich cultural background of hyperlocal particularities found in popular culture, heritage, nature and space, both physical and digital.

We could call it the Avatar Terroir, the same as in wine, where the terroir defines the complete natural environment in which a particular wine is produced, including factors such as the soil, topography, and climate.

Going from: fake, generic, loud, biased, profiling, sterile and simplistic representations of self.

To: Using traits of the local culture, materials and place.

On the left, we place the current state of avatars, which are mainly an expression of Corporate Optimism: hyper-functional, median, politically correct digital beings, from the cuteness of Apple’s Memoji to Meta’s cartoon clones.

There are also places of extreme niche self-expression, such as virtual communities like VRChat, where a plethora of creatures roam around polygons. Those avatars are wild, creative and fantastical, but are mainly rooted in digital culture, lacking a sense of place or context.

And here on the right, where I want to go, are the existing representations of beings and humans in popular culture. We do have a rich, tactile, physical heritage of creatures in Europe.

From these, down in the Basque Country in northern Spain, who dress in sheep wool and giant bells, to this guy from Hungary. I do not aim to make a direct translation of all of this; it illustrates the physicality I aim to achieve, also drawing on identifiers of the localities, from the kelp of Greece to the moss in some Alpine forests.

I also want to explore how the digital context within a digital environment can drive the representation: since it is a scientific visualisation environment, how should avatars look, behave or be affected if we are exploring nano microbial data or outer space simulations?

It turns out that this has a name :)

I’ll tap into the Critical Regionalism movement in architecture, which rejects the placelessness of postmodernism and reclaims a contextualized practice, drawing on local materials, knowledge and culture while using modern construction technologies.

For instance, the image in the background is a map of the bioregions of Europe: geographical areas with similar biodiversity and common geographies. This could be a starting point to break away from country boundaries, which tend to be simplistic and profiling. For example, I feel more Mediterranean than Spanish; in some aspects I have less in common with someone in the woods of Galicia and more in common with someone from the coast of Lebanon.

Could the type of trees, the style of the cities or the food grown and eaten in a certain place drive the look and feel of avatars?

If this sounds political, it is because it is.

Digital spaces are colonized by globalized, corporate, extractive capitalist practices; Google, Facebook, Amazon and Apple dominate the language, the platforms and the narratives.

And I believe we need to build tools to reclaim identity and agency.

And how to do all this?

With my skillset: Digital Craftsmanship. Coming from an industrial design background, I’m familiar with the world of making things. Over the years I’ve been intentionally de-materializing my practice and designing for the digital space, because I don’t see a strong enough reason to add more stuff to the world. So I have experience in 3D environments and digitization.

For this project I envision using 3D scanning, computer vision, generative textures, parametric design, computational photography, generative AI, motion capture.

As a hint, what I could see myself doing in this project is climbing a mountain deep in the Carpathians, 3D scanning a rock and feeding it to a generative system that blends it with a scientific dataset and proposes avatars.

FRAMEWORK

Technical scope

Research

references / inspiration / links

links database → https://cunicode.notion.site/129454e7e114817e9ac2e1fc298a9241?v=129454e7e1148128ae00000cd13f0c98

Technical context

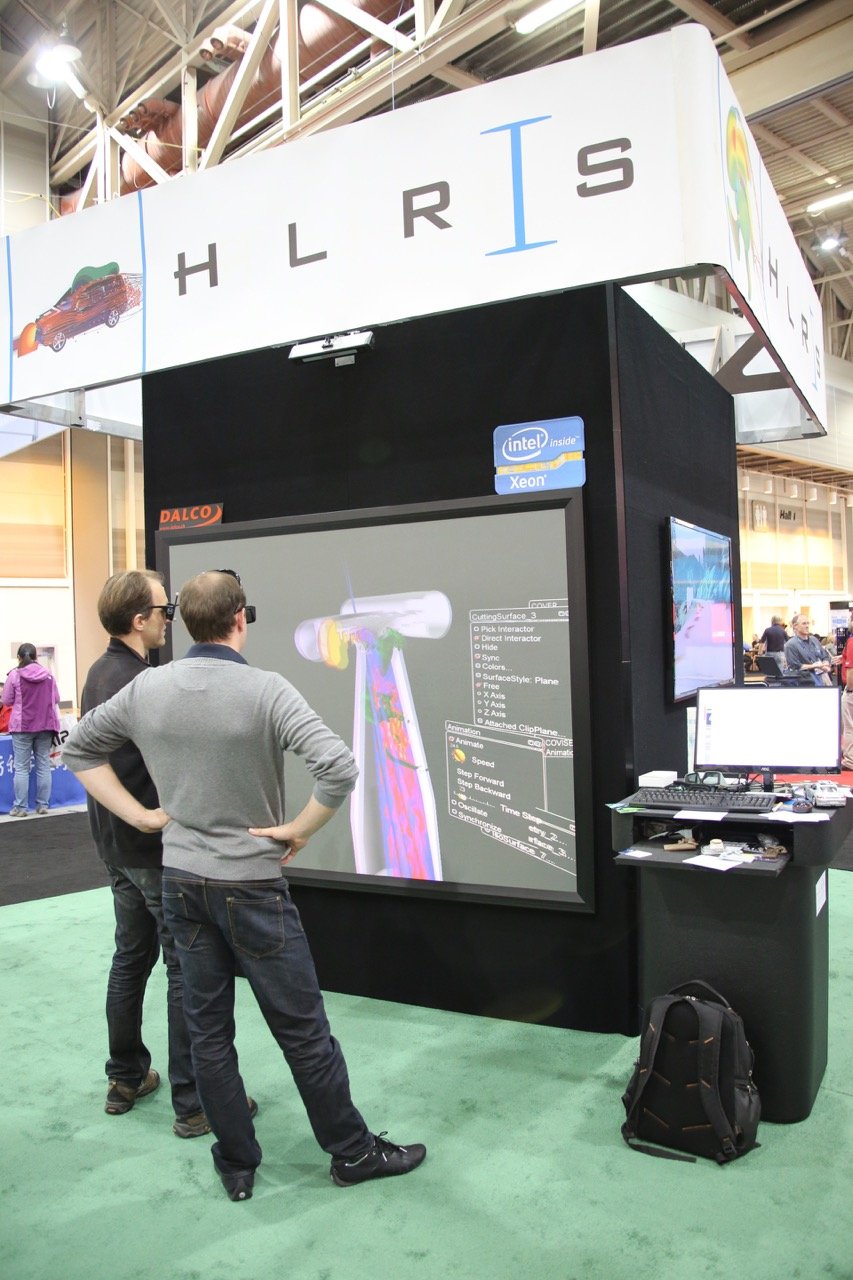

From the Host: HLRS

VR tools and facilities

Software developed at HLRS transforms data into dynamic projections. Wearing 3D glasses or head-mounted displays, users of our facilities and other tools can meet in groups to discuss and analyze results collaboratively.

Wearing 3D glasses, users can step inside simulations in this 3x3 meter room. Using a wand, it is possible to move through the virtual space and magnify small details.

Collaboration in virtual reality

When face-to-face meetings in the CAVE are not possible, software developed at HLRS enables persons in different physical locations to meet and discuss simulations in virtual reality from their workplaces or home offices.

Software

The Visualization Department at HLRS offers powerful virtual reality and augmented reality tools for transforming abstract data sets into immersive digital environments that bring data to life. These interactive visualizations support scientists and engineers across many disciplines, as well as professionals in nonscientific fields including architecture, city planning, media, and the arts.

COVISE (Collaborative Visualization and Simulation Environment) is an extendable distributed software environment to integrate simulations, postprocessing, and visualization functionalities in a seamless manner. From the beginning, COVISE was designed for collaborative work, allowing engineers and scientists to spread across a network infrastructure.

VISTLE (Visualization Testing Laboratory for Exascale Computing) is an extensible software environment that integrates simulations on supercomputers, post-processing, and parallel interactive visualization.

Other tools and technical solutions for this project:

Visual Language Models: for understanding images

Generative 3D models: for giving shape to concepts

Parametric Design: for computationally defining boundaries and shapes

Gaussian splatting: For details and visuals beyond meshes and rendering

Computer vision: for movements and pose estimation

Depth estimation / video-to-pose

3D scanning: for real world sampling

photogrammetry / dome systems / lidar / optical scans

Image generators: for texture synthesis

Societal context

Understanding the role of avatars in culture

Bibliographic research in the library collection of the Museu Etnològic i de Cultures del Món, looking for folklore representations of beings.

[non-human / human] representations in folk culture

Pagan / material-rich costumes / masks.

A good repository of material qualities of costumes is found on the work Wilder Mann by Charles Fréger:

photos: Wilder Mann by Charles Fréger

Other sources consulted include:

The photographs by Ivo Danchev, especially the Goat Dance collection

Masques du monde - L'univers du masque dans les collections du musée international du Carnaval et du Masque de Binche

Mostruari Fantastic (Barcelona)

El rostro y sus máscaras (Mario Satz)

Analogies and parallels

Expressive avatars at edge digital cultures (VRChat)

VR spaces

Costuming Cosplay Dressing The Imagination (Therèsa M. Winge)

Webcam backgrounds as a shy attempt at self-expression

Relationship between the human body, space, and geometry [ Bauhaus ]

DEV / WIP / EXPERIMENTS / PROOF OF CONCEPT

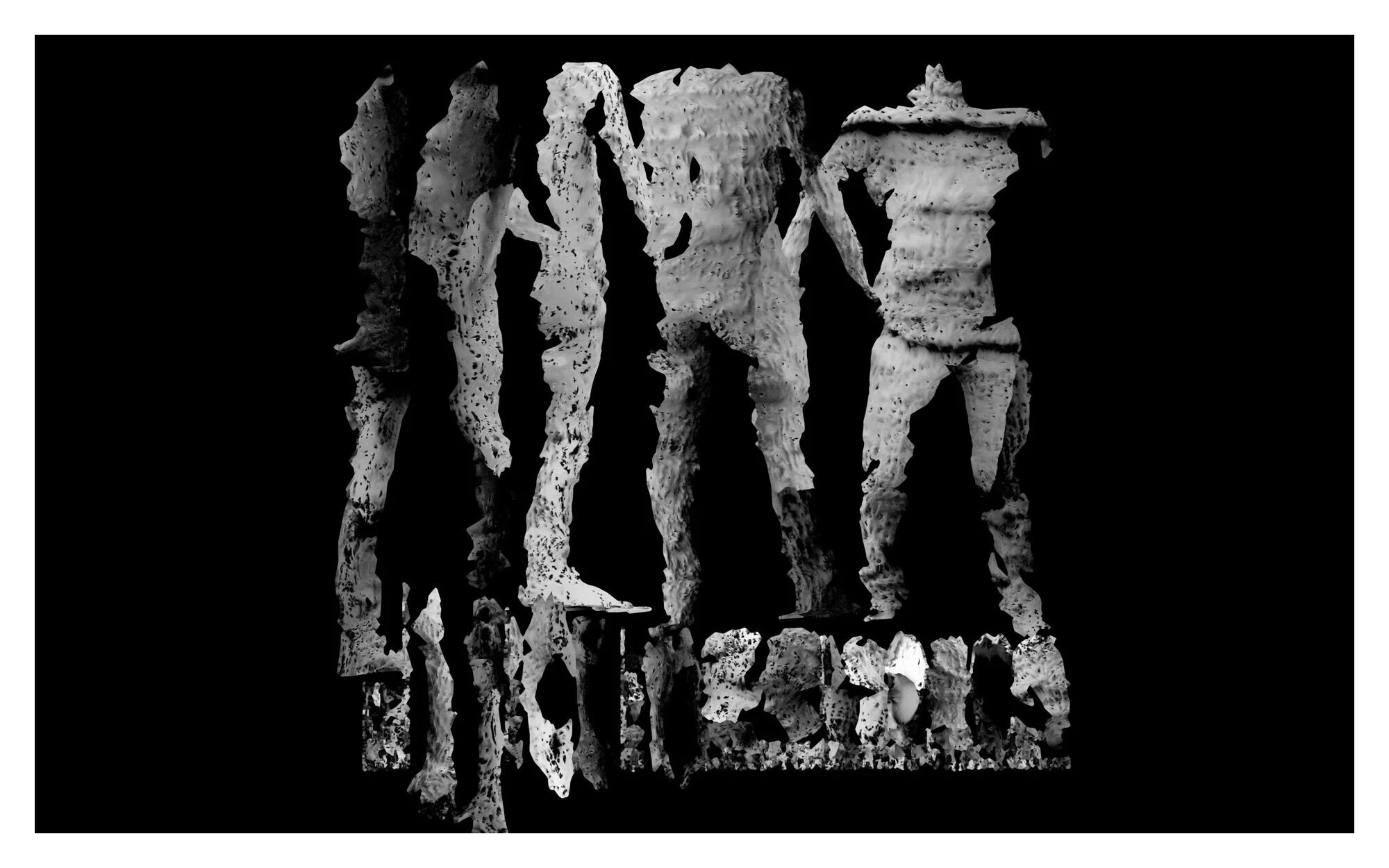

Proof of concept 01

Characters informed by the materiality of their context.

In this experiment, I combine the aesthetics, material and texture characteristics of a setting [microscopic imagery, fluid dynamics, space simulations…] and translate them to visual cues shaping and texturing a character.

Proof of concept #3

Using gaussian splatting to represent detailed material features

-> scan of the Triadisches Ballett at the Staatsgalerie Stuttgart

Text 2 3D models

challenge:

To investigate: if a system can output a turntable render of the creation before the meshing step, it could be possible to train a NeRF on it and create a neural representation of the volume without going through triangles…

COLLABORATIONS

HLRS sessions

Meetings with the HLRS team to discuss the project. Some of the things we discussed are:

VR continuum and role of the CAVE systems

XR expos and industry events

Remote Access to a GPU cluster

Access to photo-scanned materials and textures

Use-cases for avatars in HLRS

Visit / Jan ‘25

Demo of equipment, projects, use cases and vision

With the aim of including humanistic thought and sociological context in this project, a conversation was arranged with Nico Formánek, head of the Department of Philosophy of Computational Sciences at HLRS.

Some of the concepts discussed were:

How do we “represent”?

Representation is a choice

When something is defined/described (e.g. in thermodynamics) there is a whole set of things that are left undefined.

Every choice leaves something out. (As per Jean-Paul Sartre’s concept of "choice and loss": every decision involves a trade-off, meaning something is always left behind. Sartre’s idea of "radical freedom" suggests that we are condemned to choose, and in choosing, we necessarily exclude other possibilities.)

Opportunity for this project to make explicit what is left out by its choices

The concept of positioning: in a social context

How do conventions apply in digital spaces / VR?

Standing - relationships between two things

How context guides standing (e.g. music in opera)

References: Claus Beisbart

Virtual Realism: Really Realism or only Virtually so?

This paper critically examines David Chalmers's "virtual realism," arguing that his claim that virtual objects in computer simulations are real entities leads to an unreasonable proliferation of objects. The author uses a comparison between virtual reality environments and scientific computer simulations to illustrate this point, suggesting that if Chalmers's view is sound, it should apply equally to simulated galaxies and other entities, a conclusion deemed implausible. An alternative perspective is proposed, framing simulated objects as parts of fictional models, with the computer providing model descriptions rather than creating real entities. The paper further analyzes Chalmers's arguments regarding virtual objects, properties, and epistemological access, ultimately concluding that Chalmers's virtual realism is not a robust form of realism.

new book: Was heißt hier noch real? (What's real today?)

Visit / May ‘25

During this stay, together with Matvey from MSC, we visited the XR Expo ‘25 in Stuttgart where new tech and approaches were exhibited. While most exhibitors were targeting industry 4.0 clients and providing digital-twin solutions, I saw a couple of interesting prototypes:

A hand-held, strapless VR headset (a modified Oculus 3), ideal for quick immersion without the hassle of isolating the viewer from the physical surroundings, as it can be removed easily.

A haptic saddle-like chair that promises a natural brain-to-movement feeling

I also worked closely with the team at HLRS, capturing videos and media from the CAVE system.

Visit - Sept ‘25

Part of this visit was dedicated to field recording, to capture sounds from HLRS, its interiors, machinery, building, outdoors and also Stuttgart’s environment.

The other objective of the visit was to work with the team to test and prototype the Minimum Viable Avatar. We successfully loaded it into the CAVE, fixed its bones and loaded its textures. We also had time to visualize the environmental textures and the modified avatars.

Tech Sessions

Meetings with Carlos Carbonell to explore the technological soundness of the idea and preceding, existing and future solutions.

Session: History of identity in digital spaces and vr platforms

Session: Hardware setup.

Session: visit to Event-Lab at the Psychology department of the Universitat de Barcelona, where they carry out technical research on virtual environments, with applications to research questions in cognitive neuroscience and psychology. We were hosted by Esen K. Tütüncü, who showed us their research projects, equipment, pipelines and vision.

Session: we did a VRChat tour, exploring different spaces, avatars and functions.

Trying on avatars seems the most fun (for me)

It seems to be a popular thing to do there, as there are many worlds for avatar hopping.

Some avatars are designed with great detail, with personalization features, physics, particles and add-ons

The self-exploratory phase of looking at oneself every time you get a new avatar is interesting

Portals are a fun way to land in unexpected places

There is a feeling of intentional weirdness, where avatars, spaces and functionalities are strange for the sake of strangeness.

Photogrammetry in VR: some spaces are built from or contain 3D scans. Due to technical limitations, those scans look bad.

Some programmatically built objects have rich features, such as collisions and translucency.

Scale is a powerful factor in VR. Being tiny or giant really changes the experience

Business Sessions

Meetings with Dr. Claudia Schnugg to discuss and explore how to shape this project for continuation beyond the residency

We reviewed artworks and artists that deal with similar issues or mediums.

A key component of the sessions was to build something that has continuation beyond the STARTS project. For this, I drafted some outputs that could be useful in different art circuits with different objectives, budgets and environments.

With Claudia we explored the potential of making something tangible in this project. As a curator and art expert she suggested going beyond a video piece and designing an environment to tell the story of this project that could fit different settings.

A recurrent question that comes up in the conversations is “What makes a space a place?”; the piece should reflect that.

Exploring the Problem

The core concept of VR Terroir is to explore how the environment can shape the existence of a formal and visual digital identity.

This comes as resistance to the standardization of digital spaces, which are often provided and curated by BigTech companies that are averse to uncertainty and tend to build systems favoring the stability and predictability that their markets demand.

We see companies rushing to provide platforms for digital identity creation with the hope to be the user’s sole representation online, with the aim that the created avatar/memoji/profile will be carried across spaces and devices, so it can be algorithmically followed, extracted and targeted.

The way BigTech provides identity representation online is by offering a seemingly unlimited number of possibilities, composed from a meticulously selected set of elements that will render each identity within the expected ranges. This means that you can craft your avatar down to the last pixel as long as it falls within the ToS of the provider, and once it is set it is static: it won’t change, it won’t evolve, it won’t adapt to new environments, new experiences, lived moments or the passage of time.

I believe that this is bland and distracting, that avatars are boring and as expected.

Life is not like that: things change you; experiences and places slowly leave a mark on you, from inside and outside.

And I’m exploring this.

Experiment

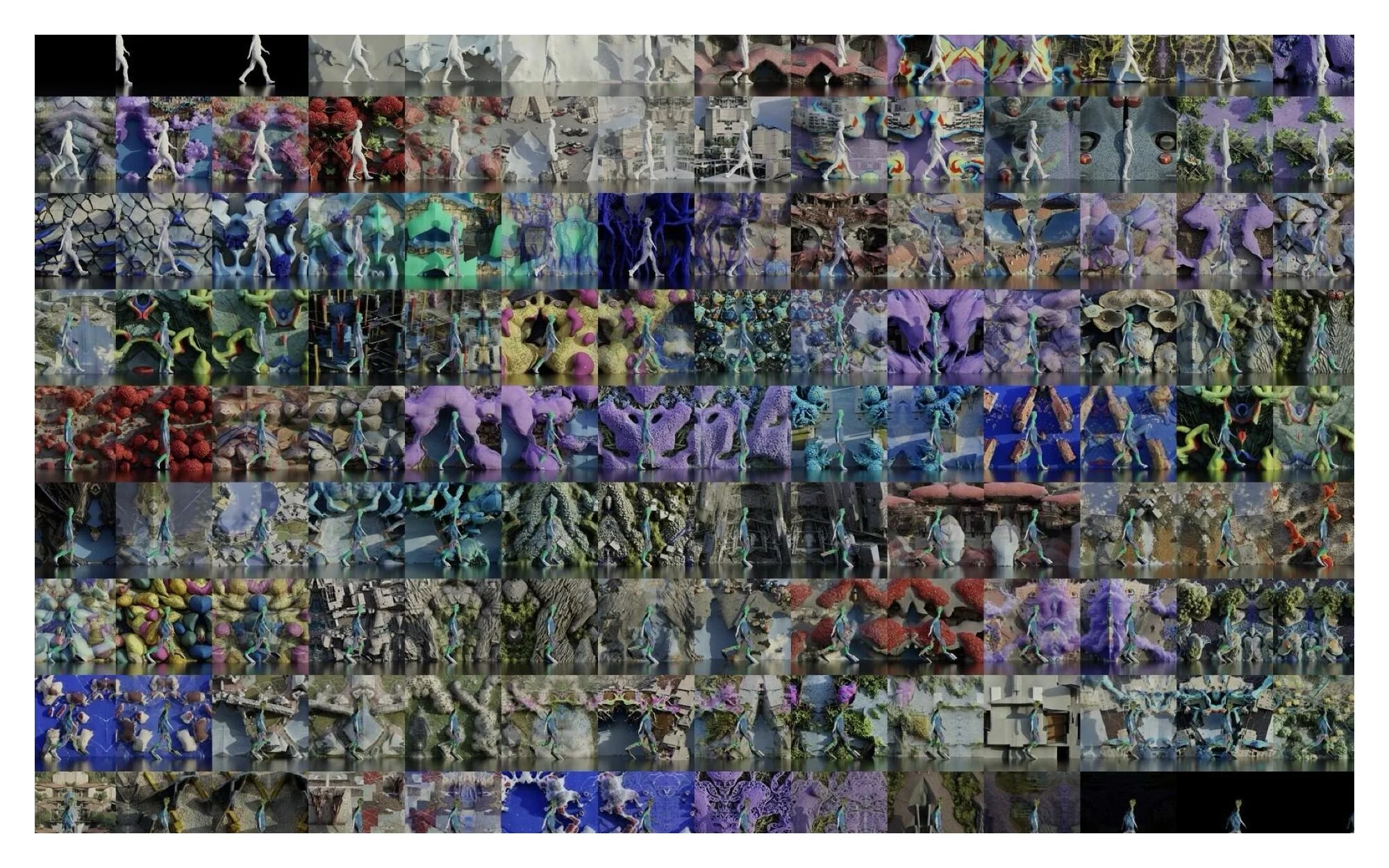

To make the concept tangible, I built a digital environment where a digital character roams while the environment slowly informs and shapes its texture and behaviour.

The resulting character mutates throughout the experience, ending up as something still recognizable but modified.

The transformation happens slowly, almost imperceptibly, and it carries across time and space.

The character: To be as neutral as possible, it is a textureless 3D scan of myself.

The environment: A 3D scene composed with synthetic materials made from images of scientific visualizations and physical textures and places around the HLRS.

Result: An environment-affected avatar character

This evolution process is documented and presented as a video.

The final avatar is examined and dissected and presented as a physical installation of layered printed clothes.

Visual Material

To build the digital environment where the character exists, I needed some references.

During the residency visits at Stuttgart and HLRS facilities I collected a set of images that are the base of the visual qualities of this artwork.

First we took screenshots and video samples of the simulations and VR environments that are used in the CAVE system at HLRS including: scientific spatial-data visualizations, architectural and urban planning data, or medical and biological imagery.

Those images have distinct visual qualities such as saturated colors, synthetic textures, polygonal simplification, flat materiality, dense point clouds, 3D scanning blobs and multi-layered volumetric MRI images.

In parallel, photos of the physical environment where all this is happening were also captured.

This includes the space, the CAVE setup, the servers powering the visualizations, the building, the neighbourhood and, to a larger extent, Stuttgart itself.

This is a very subjective and personal part of the project, as the images were taken from my own perspective and experience of being there. Thus those are my physical Stuttgart textures.

🗒️ -> Due to this subjectivity, the work also considers a place-based workshop for people to reflect on the algorithmic representation of places and their own experiences through the exercise of creating synthetic textures

Texture Generation

What makes a space a place

To blend the inside and the outside, the digital and the physical, I built a workflow that extracts the visual qualities of given images and composes a new one that is both and none at the same time.

I fed the images of the digital environments and the Stuttgart photos to the system and ran it unsupervised for 1500 generations.

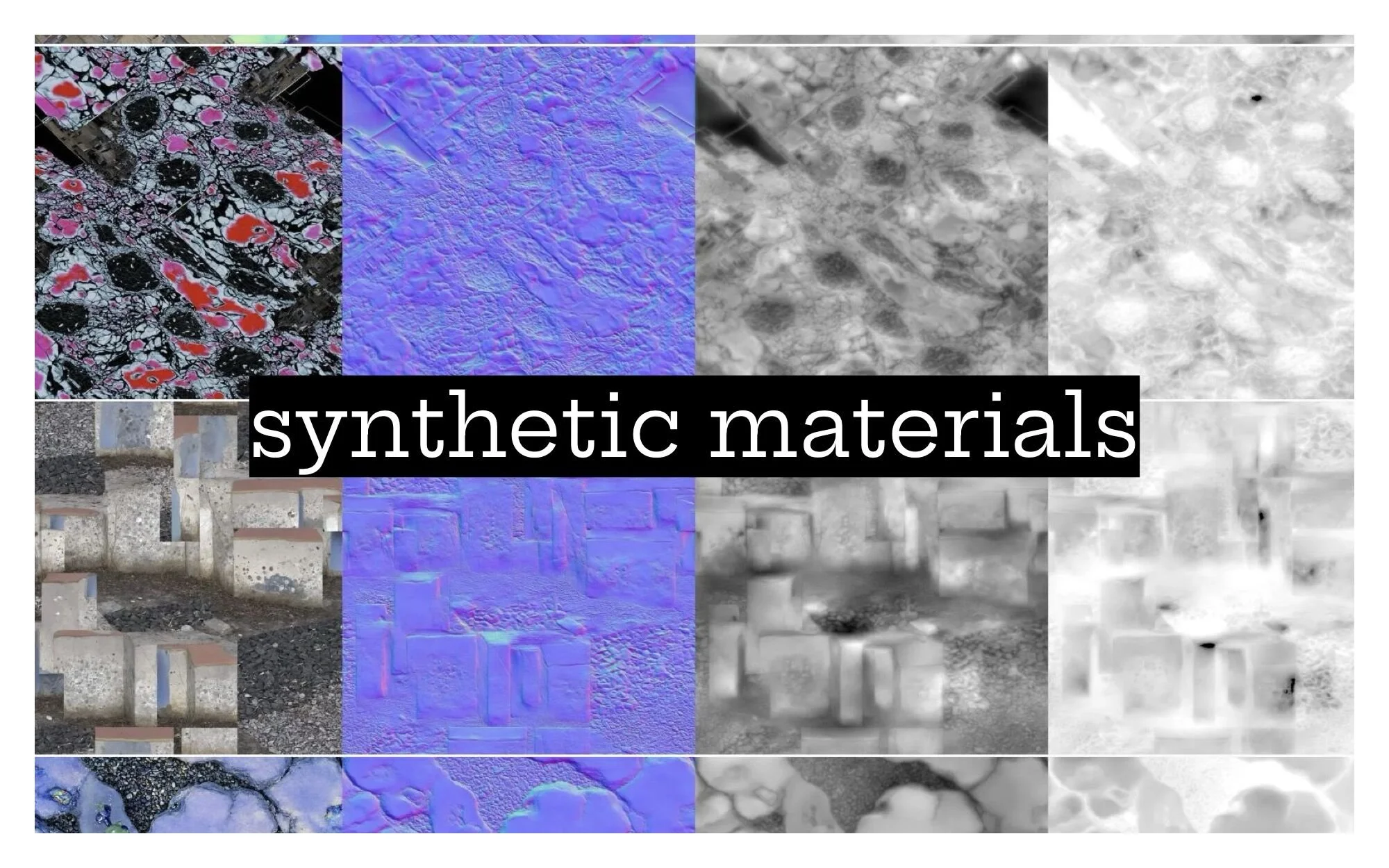

Synthetic Materials

To bring those textures back to the digital environment, I created seamless PBR (Physically Based Rendering) materials.

The steps involve:

Turning the images into seamless tiles: so they can be applied to larger areas than the texture’s boundaries

Creating a normal map: this tells the renderer how light should behave when it hits a surface with certain geometric characteristics. These maps are not only very useful but also beautiful: each pixel of the image stores a 3D direction vector, the surface normal at that point. These directions have three components:

R (red) → X-axis (left–right)

G (green) → Y-axis (up–down)

B (blue) → Z-axis (outward/inward)

Generating estimated roughness maps: depending on the image, the system guesses which parts would be shiny vs matte, and creates a grayscale image representing the roughness values.

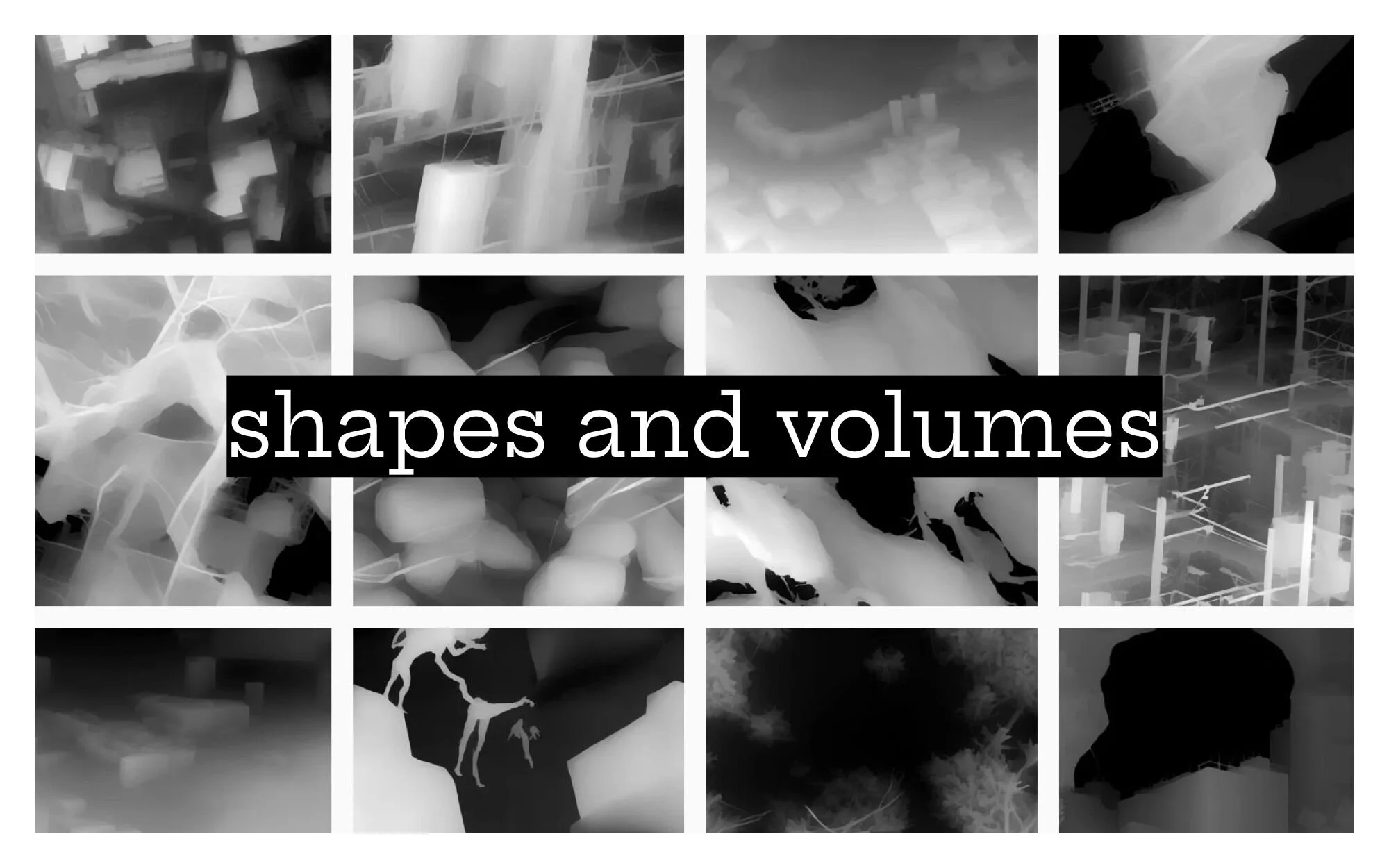

Generating a depth map: This was achieved with a combination of models and approaches, including MiDaS and DA. The depth is used to create a displacement map that pushes the geometry along the surface normal in a range from 0 to 1. To be efficient, the grayscale depth maps need to contain as much information as possible between 0 and 1. A typical 8-bit grayscale image has 256 values of gray, which means that 3D software can choose between only 256 positions to place the mesh. In very detailed meshes this is not enough, and banding appears (a stepped effect similar to the contour lines of a topographic map). To bypass this issue, we need to work with images of higher bit depth; I ended up creating 32-bit floating-point depth maps, which allow on the order of 4 billion values per pixel. (I overdid it, but the meshes are smooth now.)

8-bit - 256 gray levels - Standard images, monitors

16-bit - 65,536 gray levels - Photography, medical, scientific

32-bit - ~4.3 billion gray levels (or float precision) - HDR, radiance maps, scientific data
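To see why bit depth matters for displacement, the sketch below quantizes a smooth 0-1 ramp at 8 and 16 bits and counts how many distinct height positions survive. It is an illustration only, not the pipeline used in the project; the 0.10 displacement range is an assumed example value.

```python
def distinct_levels(bits: int, samples: int = 100_000) -> int:
    """Count the height positions left after quantizing a smooth 0-1 ramp."""
    top = 2 ** bits - 1  # highest integer code at this bit depth
    ramp = (i / (samples - 1) for i in range(samples))
    return len({round(v * top) for v in ramp})


def step_size(bits: int, displacement_range: float) -> float:
    """Smallest height step a mesh can take for a given displacement range."""
    return displacement_range / (2 ** bits - 1)


levels_8 = distinct_levels(8)     # 256 positions
levels_16 = distinct_levels(16)   # 65,536 positions
step_8 = step_size(8, 0.10)       # assumed 0.10-unit displacement range
step_16 = step_size(16, 0.10)
```

With the assumed range, each 8-bit step is 257 times larger than a 16-bit step, which is where the visible terracing on dense meshes comes from.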

Now we have fully functional PBR materials that condense the visual and textural experience of the digital and physical context of the HLRS.
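The normal-map encoding described in the steps above can be sketched in a few lines. This is a generic finite-difference approach, not necessarily the tool used in the project. It assumes a height grid as input and a green channel that follows image rows; both `normal_map` and `encode_normal` are illustrative names.

```python
import math


def encode_normal(nx: float, ny: float, nz: float) -> tuple:
    """Map a unit normal with components in [-1, 1] to 8-bit RGB in [0, 255]."""
    return tuple(min(255, int((c * 0.5 + 0.5) * 255 + 0.5)) for c in (nx, ny, nz))


def normal_map(height, strength=1.0):
    """Build RGB normals from a 2D height grid using finite differences."""
    rows, cols = len(height), len(height[0])
    out = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Clamp neighbour indices at the borders of the grid.
            left, right = height[y][max(x - 1, 0)], height[y][min(x + 1, cols - 1)]
            up, down = height[max(y - 1, 0)][x], height[min(y + 1, rows - 1)][x]
            # The normal leans away from the direction in which height rises.
            nx, ny, nz = -(right - left) * strength, -(down - up) * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append(encode_normal(nx / length, ny / length, nz / length))
        out.append(row)
    return out


flat = normal_map([[0.0] * 3 for _ in range(3)])
ramp = normal_map([[0.0, 0.5, 1.0] for _ in range(3)])
```

A flat surface encodes to (128, 128, 255), the characteristic lilac-blue of normal maps; any slope shifts the red or green channel away from 128.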

Scene Design

Since I wanted to showcase how an avatar changes over time influenced by the context, I needed to create the environment, the avatar and the event.

The character

I discarded using a standard 3D model or an avatar from an available platform because that would have involved decisions that I did not want to make and would be trivial.

Since the textures and the whole experience are subjective and personal, I opted for using a scan of myself. This was achieved via photogrammetry using Meshroom, and it was a special moment. I’m not one to spend much time around mirrors or curating my looks or poses; nevertheless, I am conscious of my body and appearance. While doing the 3D scan and processing the 3D model, I saw myself as a whole, from all angles. I recognized myself, but this was not how I remembered myself. I saw a middle-aged person, with hunched shoulders and an overall pose that was a bit dissonant with the memory I had of myself.

Anyway, now I know better who I am and how the world sees me from all perspectives (at least physically).

I guess I accidentally made a self-portrait, and I liked it.

The environment

My first approach was to build a cube scene where everything would happen, a kind of digital replica of the CAVE, which itself is a replica of something real or synthetic.

I explored applying modifier and effector relationships between the space, the character and the movement. For this approach I had the help of Emile Lefebvre, who composed a Geometry Nodes setup, and we tested the behavior.

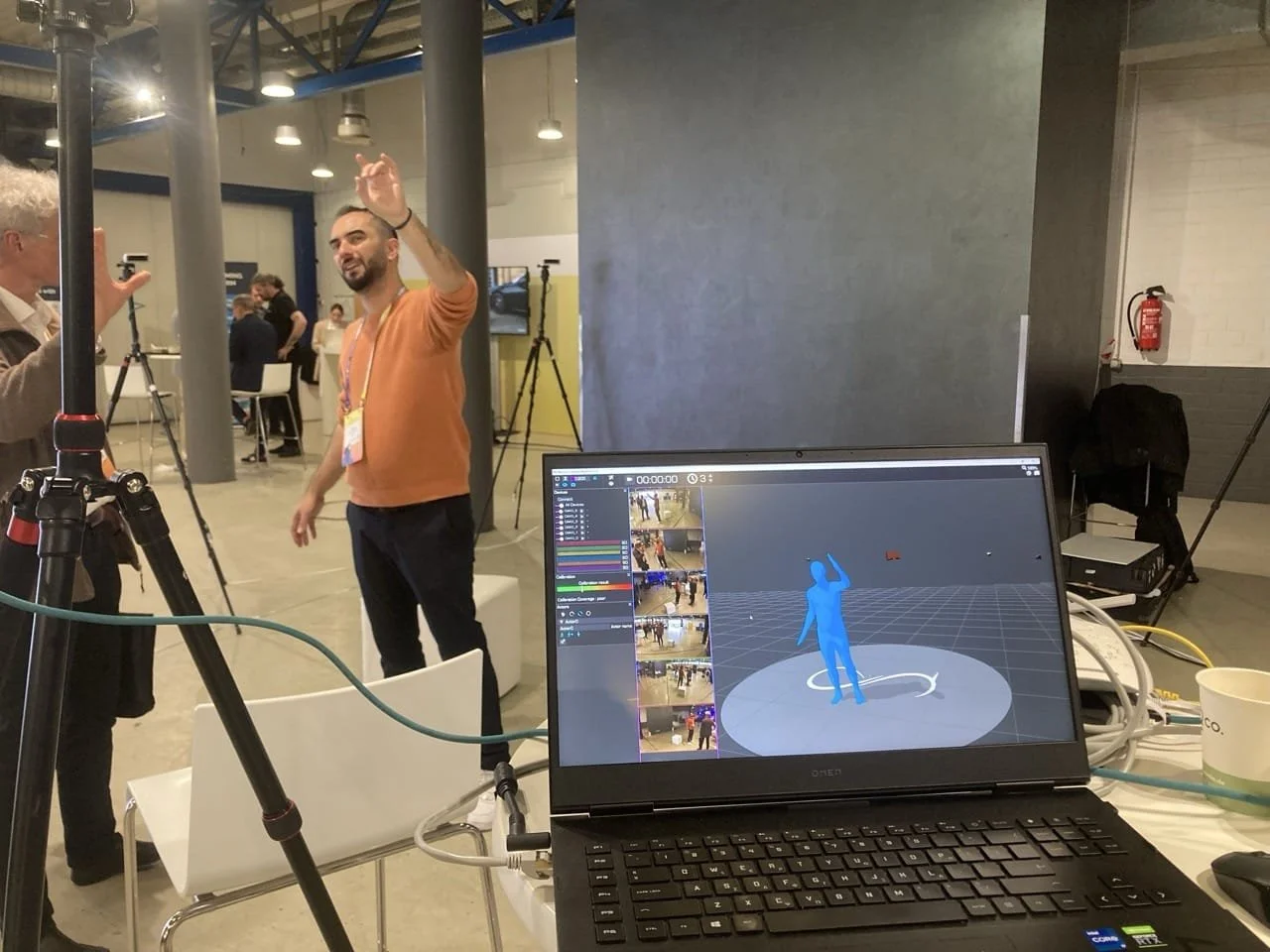

The movement

I added bones to the 3D version of myself so it could be animated.

So I recorded myself in a space of 2x2x2 meters just being there, moving from side to side, waiting, standing, looking… with the idea to turn this video into movement instructions to drive the avatar.

The process took longer than expected and was computationally intensive, but I managed to do it all using open-source tools: VIPER Blender Mocap (https://github.com/Daniel-W-Blender-Python/VIPER-Blender-Mocap).

The results were nice, but spontaneous glitches and some bone movements appeared too digital and algorithmic, so I decided not to continue this approach.

Scene Production

I switched to a linear scene with a fine-tuned walking cycle, repeating it as if the character is going through a long corridor.

The camera follows the character and we see the background textures passing through, intersecting and affecting the avatar.

The background is composed of a smooth blend of 72 of the initial >1500 materials, and has a displacement applied that sometimes intersects with the avatar.

The effector setup applies time- and place-based reactions that gradually and permanently affect the texture and geometry of the avatar.
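The "gradual and permanent" behaviour of the effector can be illustrated with a simple accumulation rule. This is a conceptual sketch, not the Geometry Nodes setup used in the scene. It reduces the avatar to a single value, and the `rate` and `influence` parameters are assumptions made for the example.

```python
def apply_effector(state: float, target: float, influence: float, rate: float = 0.05) -> float:
    """Move the avatar value a small step toward the environment value."""
    # influence is 0 far from a material and 1 where it touches the avatar.
    return state + rate * influence * (target - state)


def walk(state: float, frames):
    """Accumulate effects over (target, influence) pairs, one pair per frame."""
    history = []
    for target, influence in frames:
        state = apply_effector(state, target, influence)
        history.append(state)
    return history


# 50 frames inside a material zone, then 50 frames outside of it.
frames = [(1.0, 1.0)] * 50 + [(1.0, 0.0)] * 50
history = walk(0.0, frames)
```

Each frame in contact nudges the value a little (gradual), and nothing pulls it back once the influence drops to zero (permanent), so the avatar keeps what it picked up along the way.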

The final render is 27000 frames, and took 12 days to render on my local setup.

The result is a 1920x1920 square video.
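For a sense of scale, the render figures above can be turned into a per-frame cost. The sketch assumes the machine rendered continuously for 12 full days. The frame rate is not stated in this log, so the 25 and 30 fps values below are assumptions used only to bracket the running time.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_per_frame(frames: int, days: float) -> float:
    """Average render time per frame for a job of the given length."""
    return days * SECONDS_PER_DAY / frames


def duration_minutes(frames: int, fps: float) -> float:
    """Playback length of the video at a given frame rate."""
    return frames / fps / 60


per_frame = seconds_per_frame(27_000, 12)       # about 38.4 seconds per frame
minutes_at_25 = duration_minutes(27_000, 25)    # 18 minutes, assuming 25 fps
minutes_at_30 = duration_minutes(27_000, 30)    # 15 minutes, assuming 30 fps
```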

The soundscape

Alongside photos and visual materials, I also did field recordings of HLRS and its urban environment, capturing sounds of the place and infrastructure, the servers and the devices that power the visualization tools along with sounds from the city and the environment around the HLRS.

Some initial experimentations with Granular Synthesis pointed the direction to build a soundtrack for the video.

With the help of sound designer and musician Gerard Valverde, we composed a texture-rich background sound that accompanies the character as it walks through the environment.

The final audio is a crafted and reactive composition of field recordings and synthetic sounds.

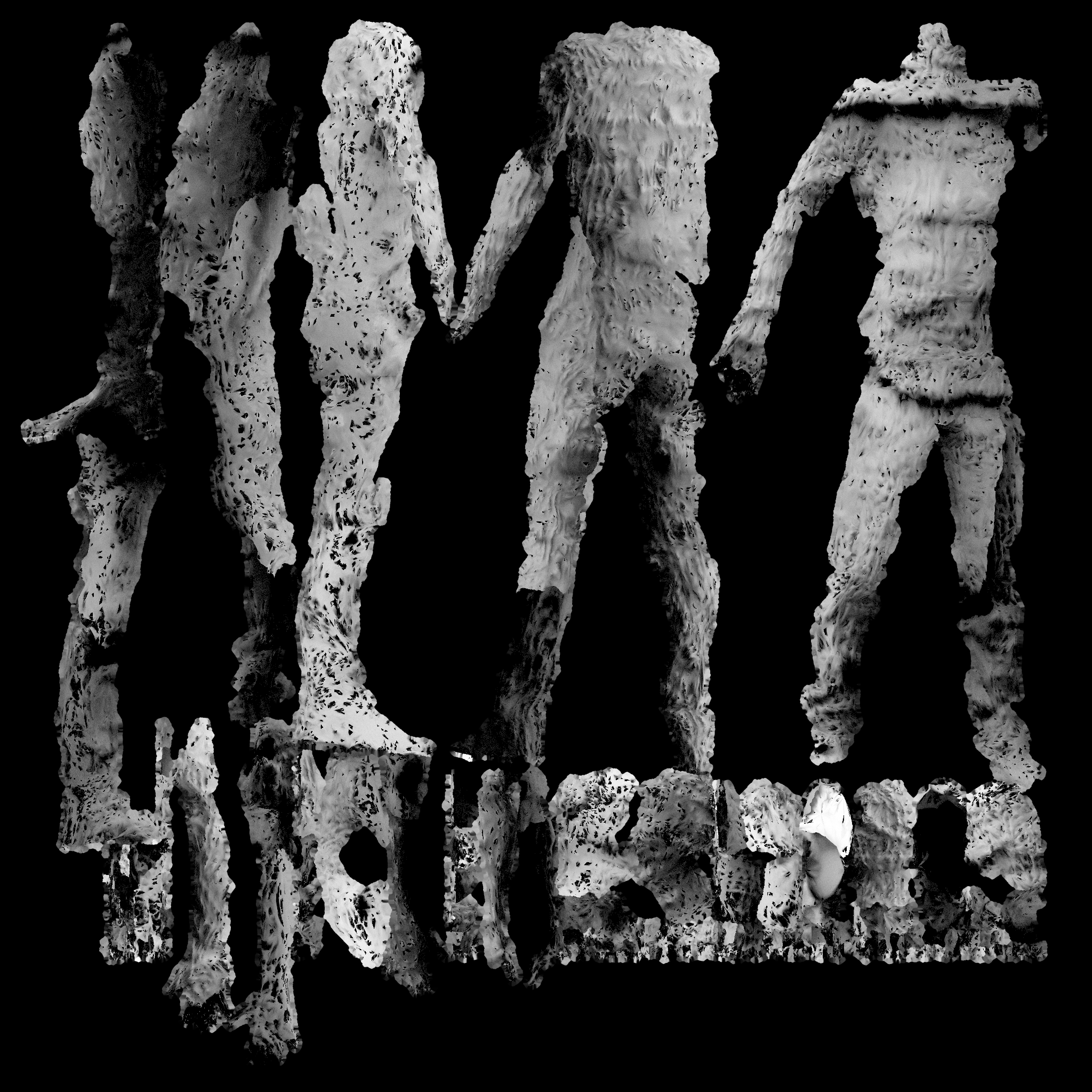

The Physical Avatar

To visualize the change that the character has gone through, I bring it back into the physical world.

An initial step in this project was to research and explore cultural representations of beings across Europe, and a common characteristic is the use of texture-rich materials like fur, moss, bark or stone.

I’m very happy that the resulting avatar has similar richness in detail and organicness, while following a technically very different approach (digital vs physical) but conceptually the same path: using the material and cultural environment as the driver of the physical appearance.

To make the avatar physical, I unwrap it (skin it), to observe all the parts and angles that compose it.

The initial approach was to 3D print it as a large-scale panel using PolyJet multi-material technology, which allows for gradual transparency and gradual softness (Shore hardness).

A sample piece was produced in Portugal, but the production price is beyond this project’s budget.

A workaround is CNC milling a bas-relief of the parts that compose the changed avatar, ideally in an alabaster-like material so it could show the changes in thickness. At the time of writing, no suitable producer has been found.

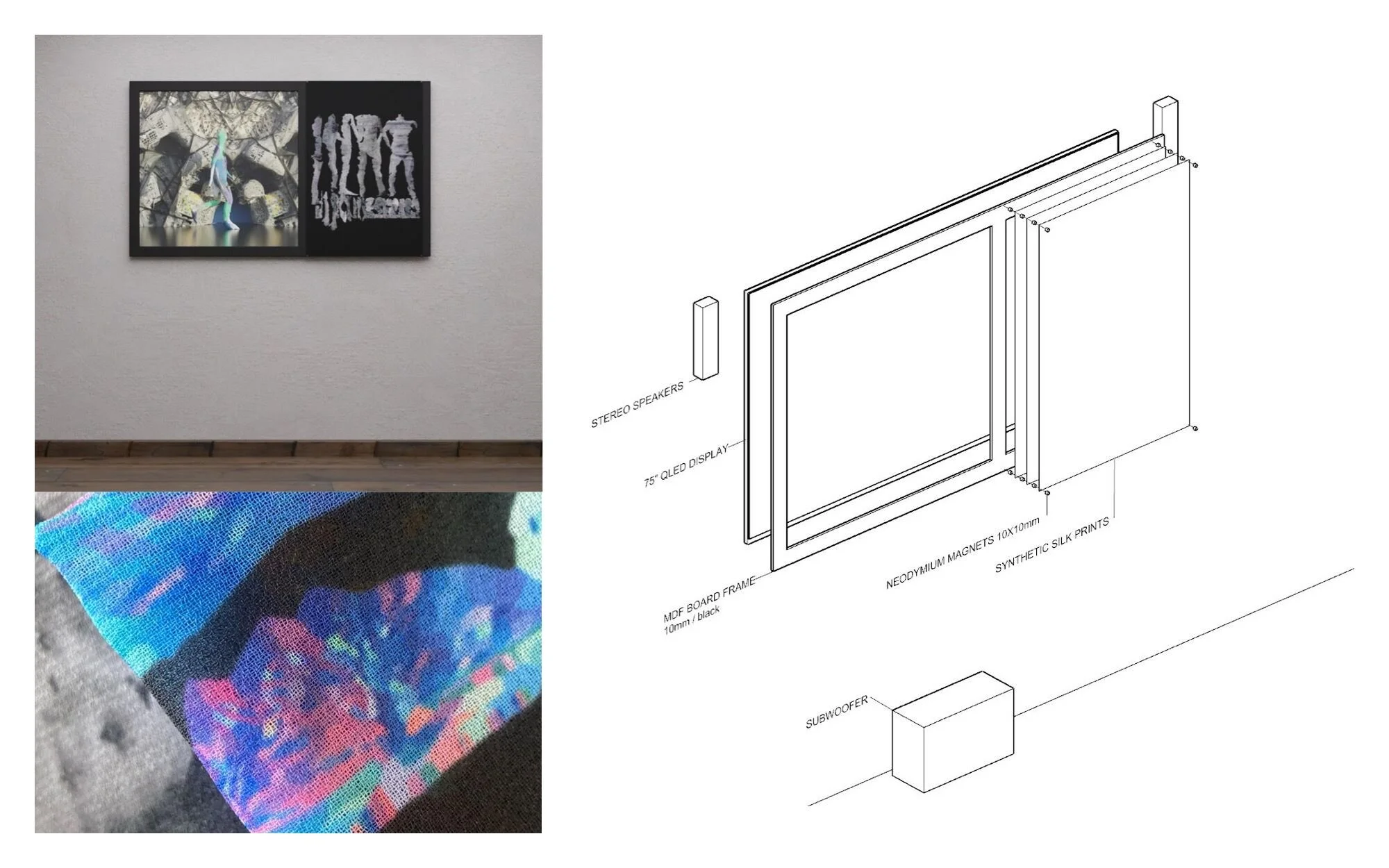

An effective and beautiful approach is to use fabrics to show the layers that compose, make, define the evolved avatar.

With a combination of light projection and translucent fabrics, we achieve a dimensional effect that resembles the layered MRI scans that we saw earlier at the HLRS.

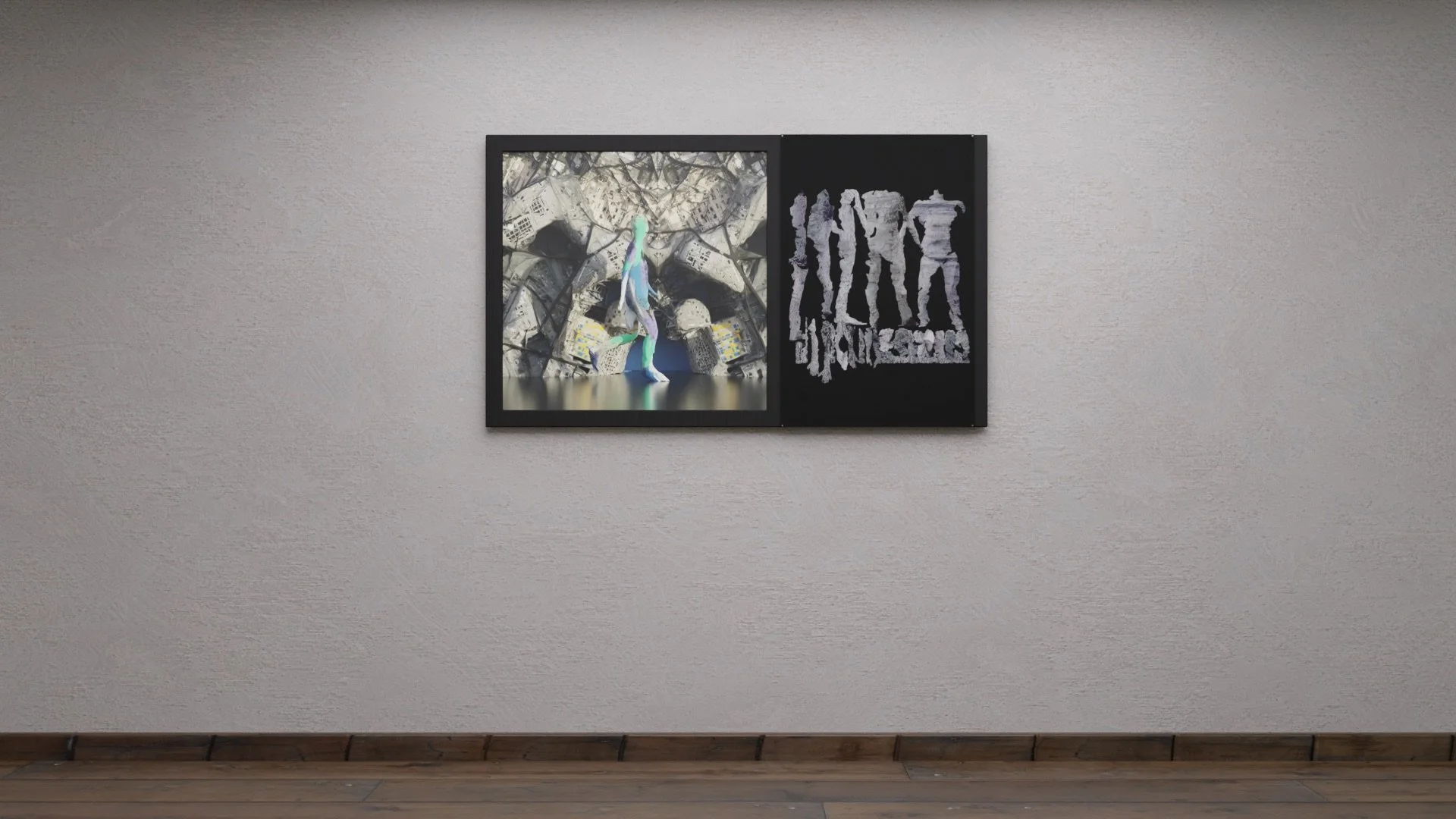

The final piece

VR Terroir is composed of:

Split video

Video A: a walking avatar being transformed over time and space

Video B: a texture depth map of the resulting avatar.

This video serves as a backlight for the fabrics

Sound: both videos share the same audio track

Synthetic silk printed fabrics

fabric 1: Roughness map

fabric 2: Normal map

fabric 3: Albedo map

VR-Terroir setup

Equipment needed for exhibit:

75-inch QLED display

Black MDF frame with a 635x635 square hole in it

2 stereo speakers

1 subwoofer

Alternatively, if the host does not provide a custom frame for the split display, a workaround can be fabricated with a standard IKEA frame and a backlight panel.

alt : 75 inch + ikea frame + backlight panel

alt : 65 inch + ikea frame + backlight panel

The Workshop / A place-based event

While the project is executed specifically in the context of the supercomputing center and Stuttgart, the topic is generic enough to connect with audiences elsewhere. To localize the project to the location where it is displayed, a workshop is proposed.

Through exploring the notion of place within digital spaces, I kinda created a workflow/process that consists of “understanding the digital, scouting the physical and merging it all together”. I enjoyed this process, and it can be part of the piece itself: a workshop can be run at the location where the piece is shown.

The workshop would invite participants to research and reflect on the algorithmic representation of a place (e.g. how does Google broadcast it? How does AI synthesise it? How mis-, under- or over-represented is it?), and then do a fieldwork session to capture images and references to analyze and synthesize into composite materials.

The piece will have a place to integrate and display the workshop results.

The Minimum Viable Avatar

The VR.Terroir project aims to explore how digital environments can affect the appearance and behavior of the user’s representations, and this is done in a visually rich manner through a video and a sculptural piece. From this approach, we can distill its essence and apply it in other contexts, specifically the HLRS Visualization Department’s XR setups: its custom CAVE hardware and its software environment, COVISE.

The starting challenge that was posed by HLRS was “how to represent users in VR” in their research setting where a fully tracked body is not viable and programming inverse kinematics might be an unnecessarily overcomplicated workaround.

The geometry

The technical constraint of user tracking within the visualization lab of HLRS is that the CAVE system only sees two points: the position of the head and that of the hand holding the command stick.

Starting from here, a set of experiments was conducted to find a shape that could contain two driving points and represent presence.

Initially a cloak-poncho-ghost-like shape was explored, because that is a familiar morphology that is neutral enough to allow for customization and add-on design.

With the intention of simplifying it further, a simple plane, subdivided enough to allow for deformation, was tested. With the correct bones and weights, it tracks the user’s movements nicely.
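A minimal sketch of how two tracked points can drive a subdivided plane follows. It is a translation-only simplification of bone skinning, not the rig built for the CAVE. The inverse-square-distance weighting, the plane dimensions and the rest positions are all assumptions chosen for illustration.

```python
import math


def bone_weights(vertex, head_rest, hand_rest, eps=1e-6):
    """Inverse-square-distance weights for the two bones, summing to 1."""
    w_head = 1.0 / (math.dist(vertex, head_rest) ** 2 + eps)
    w_hand = 1.0 / (math.dist(vertex, hand_rest) ** 2 + eps)
    total = w_head + w_hand
    return w_head / total, w_hand / total


def deform(vertices, head_rest, hand_rest, head_now, hand_now):
    """Translate each vertex by the weighted offsets of the two tracked points."""
    head_off = [n - r for n, r in zip(head_now, head_rest)]
    hand_off = [n - r for n, r in zip(hand_now, hand_rest)]
    result = []
    for v in vertices:
        w_head, w_hand = bone_weights(v, head_rest, hand_rest)
        result.append(tuple(c + w_head * a + w_hand * b
                            for c, a, b in zip(v, head_off, hand_off)))
    return result


# A 5 x 5 vertical plane, 1 unit wide, spanning heights 0 to 1.8.
plane = [(x / 4 - 0.5, y / 4 * 1.8, 0.0) for y in range(5) for x in range(5)]
head_rest, hand_rest = (0.0, 1.8, 0.0), (0.5, 0.9, 0.0)
# The head stays still while the hand reaches 0.4 units forward.
moved = deform(plane, head_rest, hand_rest, (0.0, 1.8, 0.0), (0.5, 0.9, 0.4))
```

Vertices near the hand follow it almost fully, vertices near the head stay put, and everything in between blends smoothly, which is what lets a plain surface read as a presence with only two inputs.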

The behavior

To give this plane some presence, I went back to the ghost-like-shape idea and built a shape-generator that makes blob-like-tubular shapes with contour-transparency.

These shapes and geometries are the base for the Minimum Viable VR.Terroir avatar for HLRS.

The proposed approach has been presented in a joint article titled XXX, and might be the base for further research.

Glossary